In my previous post, “Serving 200 million requests per day with a cgi-bin”, I did some quick performance testing of CGI using a program written in Go.

Go works excellently for CGI programs, for many of the same reasons it works so well for CLI programs and system daemons.

But, out of curiosity, I decided to do a bit more CGI testing with other languages.

CGI is good technology, actually

There’s a misconception that because CGI is old or because many CGI scripts had security vulnerabilities, CGI itself is somehow insecure or bad.

That’s just not the case. CGI is a simple protocol that works very well.

It’s not any more or less difficult to write secure CGI programs than it is to write any other kind of HTTP handler code.

The common alternatives to CGI (FastCGI and reverse proxies) aren’t a free lunch and have their own security complications.
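To illustrate how small the protocol is, here is a minimal sketch of a CGI program in Python. It is not taken from the benchmark repository. The `name` query parameter and the response text are made up for the example. The request arrives through environment variables and stdin, and the response is just headers, a blank line, and a body written to stdout.

```python
#!/usr/bin/env python3
"""Minimal CGI sketch: request in via environment + stdin, response out via stdout."""
import os
import sys
from urllib.parse import parse_qs


def handle(environ, body):
    """Build a CGI response (headers, blank line, body) for one request."""
    method = environ.get("REQUEST_METHOD", "GET")
    query = parse_qs(environ.get("QUERY_STRING", ""))
    # "name" is a hypothetical parameter, chosen only for this example.
    name = query.get("name", ["world"])[0]
    text = f"Hello, {name}! ({method}, {len(body)} body bytes)\n"
    return "Content-Type: text/plain; charset=utf-8\r\n\r\n" + text


def read_body(environ, stream):
    """Read at most CONTENT_LENGTH bytes; the server need not close stdin."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    return stream.read(length) if length > 0 else b""


if __name__ == "__main__":
    request_body = read_body(os.environ, sys.stdin.buffer)
    sys.stdout.buffer.write(handle(os.environ, request_body).encode("utf-8"))
```

Because the web server starts a fresh process for every request, nothing is shared between requests except whatever the program stores on disk.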

The benchmarking server

This time I used an AMD Genoa-based 60 vCPU / 240 GB RAM virtual machine to serve as a reasonable medium-sized machine.

Running benchmarks in VMs isn’t ideal because of noisy neighbor problems and other sources of variable performance.

However, for macrobenchmarks like these, that is less of a concern and the results are fairly consistent. This is even more true on a larger VM, which has fewer neighbors on the host.

Still, I do always prefer bare metal, but sometimes you leave your servers behind for other people to enjoy.

I miss “my” beautiful servers but they’re in good hands and at least I can still post to them and visualize the disk and network IO and CPU usage, which isn’t creepy to do, it’s actually perfectly normal.

I do not miss Nandos in DC and their unrefrigerated sauces!

— Jake Gold (@jacob.gold) November 12, 2024 at 8:11 PM


Standard benchmarking disclaimer

Benchmarking of any kind is fraught, and it’s easy to make mistakes, which is why there’s no substitute for real-world testing in your own environment.

- The CGI programs written in each language are broadly similar but their implementations do vary. Some use well-tested libraries while others do manual parsing and are minor abominations.

- The HTTP load testing tool vegeta was used this time for improved accuracy.

- Only the gohttpd web server was used this time because getting Apache to stop being the bottleneck proved somewhat difficult.

- The updated code and Dockerfiles are on GitHub: https://github.com/Jacob2161/cgi-bin
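The vegeta text reports in the sections below all follow the same `Name [fields] values` layout, so they are easy to load for side-by-side comparison. The following is a rough sketch, assuming only that layout. The `parse_report` helper is hypothetical and not part of vegeta, and the sample lines are copied from the Bash run.

```python
import re

# A vegeta text-report line: "<name> [<field>, ...] <value>, ..."
LINE = re.compile(r"^(?P<name>.+?)\s+\[(?P<fields>[^\]]*)\]\s+(?P<values>.+)$")


def parse_report(text):
    """Return {metric name: {field: raw string value}} for a vegeta text report."""
    report = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if not match:
            continue  # skips blank lines and the trailing "Error Set:" line
        fields = [f.strip() for f in match["fields"].split(",")]
        values = [v.strip() for v in match["values"].split(",")]
        report[match["name"]] = dict(zip(fields, values))
    return report


sample = """\
Requests [total, rate, throughput] 600, 40.07, 36.34
Latencies [min, mean, 50, 90, 95, 99, max] 838.76ms, 1.908s, 1.924s, 2.48s, 2.547s, 2.655s, 2.77s
Success [ratio] 100.00%
Status Codes [code:count] 200:600
Error Set:
"""
report = parse_report(sample)
print(report["Requests"]["rate"])  # 40.07
print(report["Latencies"]["99"])   # 2.655s
```

Values are kept as strings on purpose, since latencies mix units such as `ms` and `s`.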

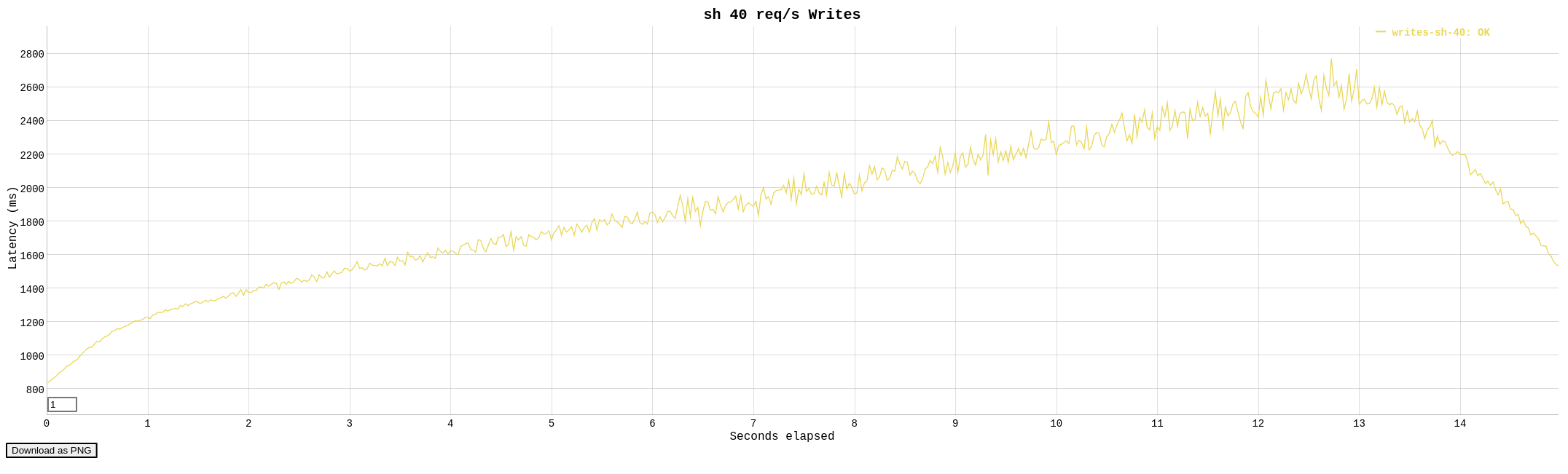

Benchmarking Bash guestbook-sh.cgi

No one should ever run a Bash script under CGI. It’s almost impossible to do so securely, and performance is terrible.

But it’s kind of funny to see that it does actually work.

Bash reached just 40 requests per second before saturating all available CPUs.

Requests [total, rate, throughput] 600, 40.07, 36.34

Duration [total, attack, wait] 16.509s, 14.975s, 1.534s

Latencies [min, mean, 50, 90, 95, 99, max] 838.76ms, 1.908s, 1.924s, 2.48s, 2.547s, 2.655s, 2.77s

Bytes In [total, mean] 6756600, 11261.00

Bytes Out [total, mean] 31200, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:600

Error Set:
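If all 60 vCPUs really were saturated at this rate, the CPU cost of a single Bash request can be estimated with simple division. This is a back-of-the-envelope sketch. It assumes every vCPU was fully busy and attributes all of that CPU time to the CGI script, ignoring whatever the web server itself used.

```python
# Figures taken from the post: a 60 vCPU machine, and Bash sustaining
# about 40.07 requests per second.
VCPUS = 60
BASH_RATE = 40.07  # requests per second


def cpu_seconds_per_request(vcpus, rate):
    """CPU time per request, assuming every vCPU is fully busy at this rate."""
    return vcpus / rate


cost = cpu_seconds_per_request(VCPUS, BASH_RATE)
print(f"{cost:.2f} CPU-seconds per request")  # 1.50 CPU-seconds per request
```

Roughly one and a half CPU-seconds per request is in the same range as the 1.9-second mean latency reported above.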

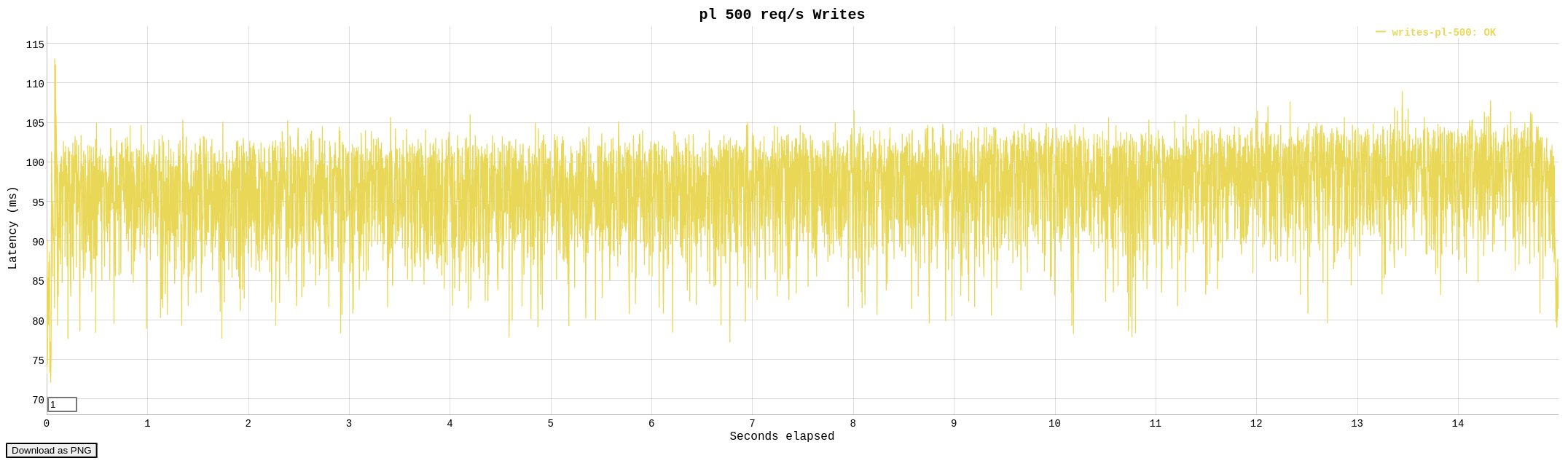

Benchmarking Perl guestbook-pl.cgi

Perl shows decent performance for a scripting language, managing 500 requests per second. The latency distribution is quite consistent.

Requests [total, rate, throughput] 7500, 500.04, 497.25

Duration [total, attack, wait] 15.083s, 14.999s, 84.166ms

Latencies [min, mean, 50, 90, 95, 99, max] 72.106ms, 96.842ms, 98.021ms, 102.438ms, 103.292ms, 104.728ms, 113.681ms

Bytes In [total, mean] 81585000, 10878.00

Bytes Out [total, mean] 390000, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:7500

Error Set:

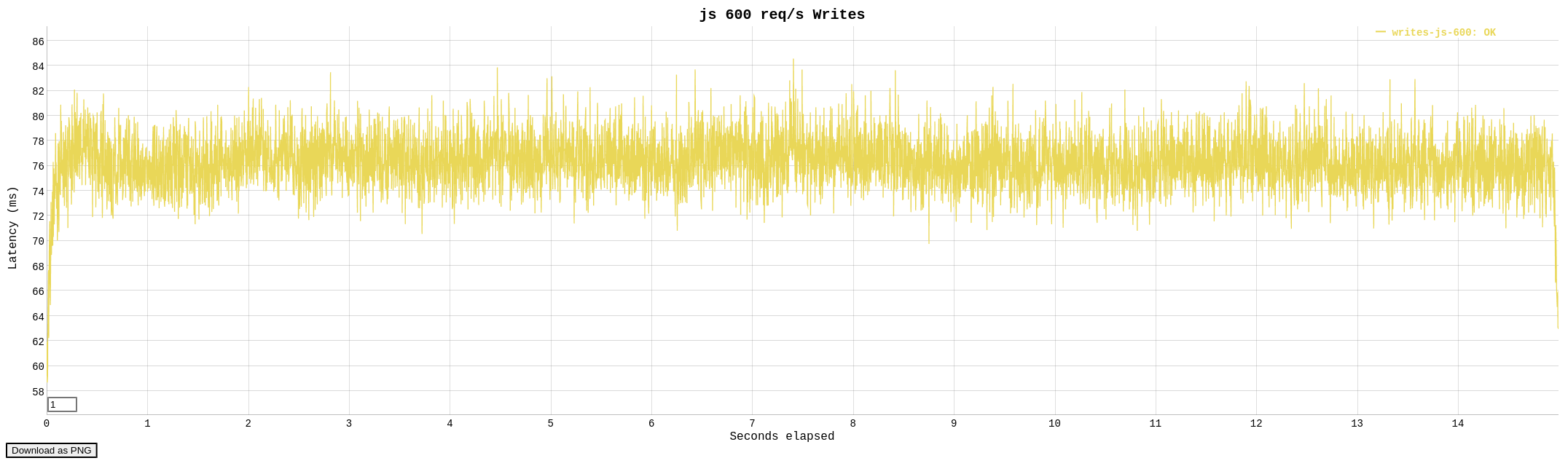

Benchmarking JavaScript guestbook-js.cgi

JavaScript with Node.js surprised me by performing much better than I expected in a CGI environment, hitting 600 requests per second with very consistent latencies.

Requests [total, rate, throughput] 9000, 600.07, 597.56

Duration [total, attack, wait] 15.061s, 14.998s, 62.961ms

Latencies [min, mean, 50, 90, 95, 99, max] 57.999ms, 76.306ms, 76.271ms, 78.824ms, 79.563ms, 80.983ms, 84.569ms

Bytes In [total, mean] 96858000, 10762.00

Bytes Out [total, mean] 468000, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:9000

Error Set:

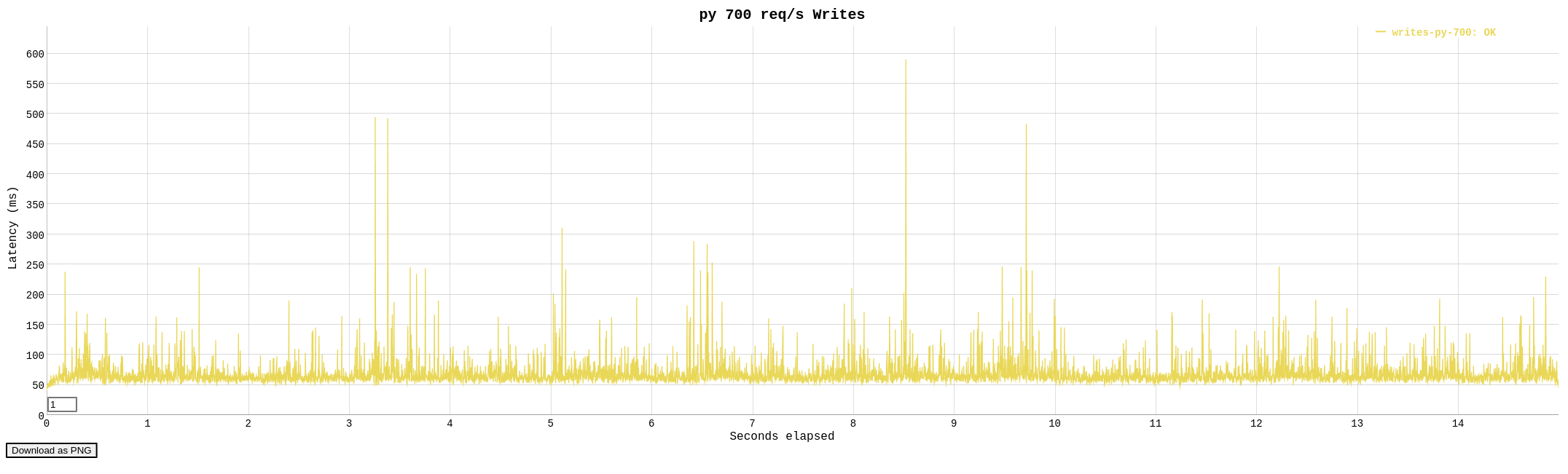

Benchmarking Python guestbook-py.cgi

Python manages 700 requests per second, which seems respectable.

Requests [total, rate, throughput] 10500, 700.11, 695.36

Duration [total, attack, wait] 15.1s, 14.998s, 102.49ms

Latencies [min, mean, 50, 90, 95, 99, max] 44.186ms, 66.602ms, 62.544ms, 78.77ms, 93.006ms, 142.416ms, 590.895ms

Bytes In [total, mean] 113001000, 10762.00

Bytes Out [total, mean] 546000, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:10500

Error Set:

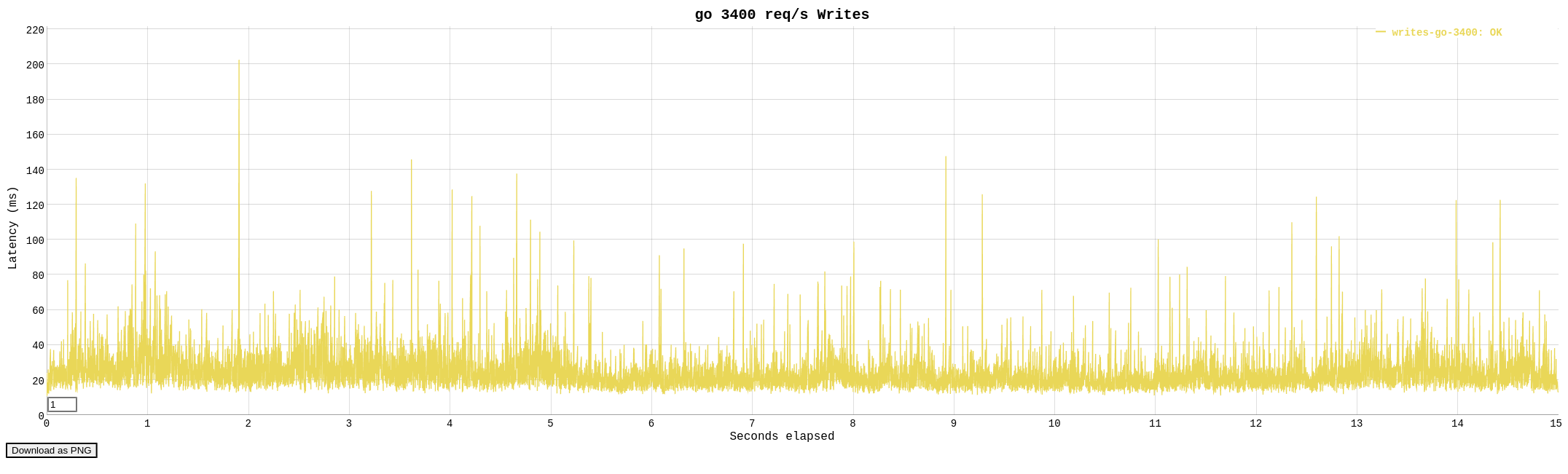

Benchmarking Go guestbook-go.cgi

Even though Go is a very fast compiled language, it does have a runtime that must be initialized on startup.

Despite this initialization overhead, Go reached 3,400 requests per second with low latencies, which still places it in the “very fast” tier of languages.

Requests [total, rate, throughput] 51000, 3399.39, 3396.04

Duration [total, attack, wait] 15.017s, 15.003s, 14.786ms

Latencies [min, mean, 50, 90, 95, 99, max] 10.456ms, 21.817ms, 20.458ms, 29.03ms, 33.001ms, 43.833ms, 202.566ms

Bytes In [total, mean] 559062000, 10962.00

Bytes Out [total, mean] 2652000, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:51000

Error Set:

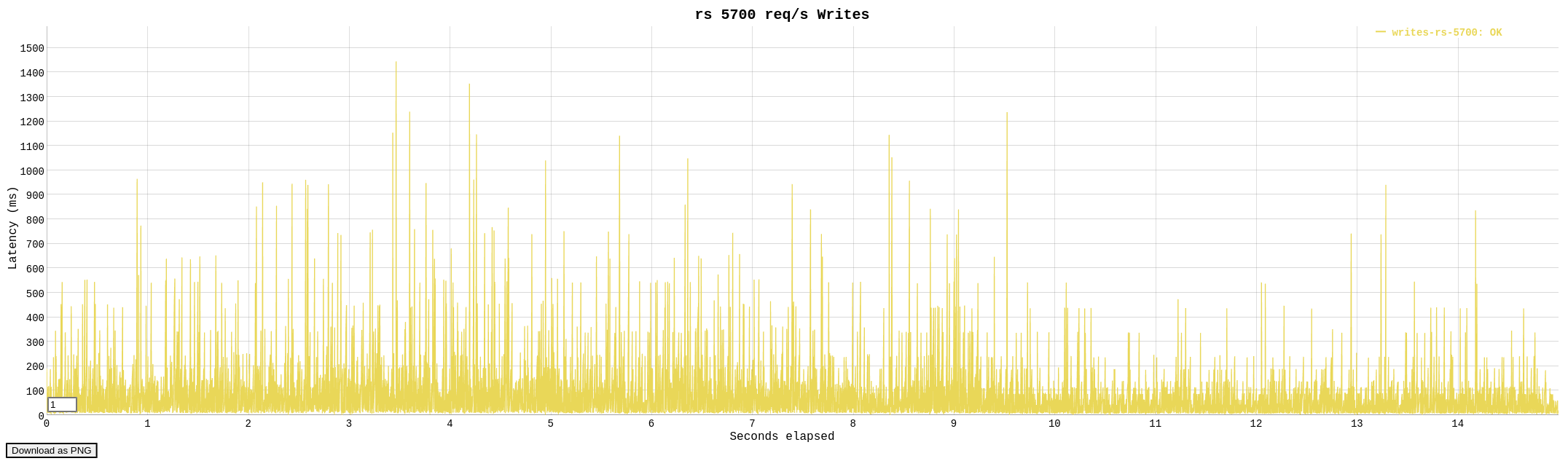

Benchmarking Rust guestbook-rs.cgi

Rust hits nearly 5,700 requests per second!

The tail latency appears oddly high (possibly SQLite database contention), but the median latency is extremely good.

Requests [total, rate, throughput] 85493, 5699.52, 5660.27

Duration [total, attack, wait] 15.104s, 15s, 103.997ms

Latencies [min, mean, 50, 90, 95, 99, max] 4.35ms, 26.28ms, 15.223ms, 47.883ms, 79.299ms, 186.667ms, 1.444s

Bytes In [total, mean] 928624966, 10862.00

Bytes Out [total, mean] 4445636, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:85493

Error Set:

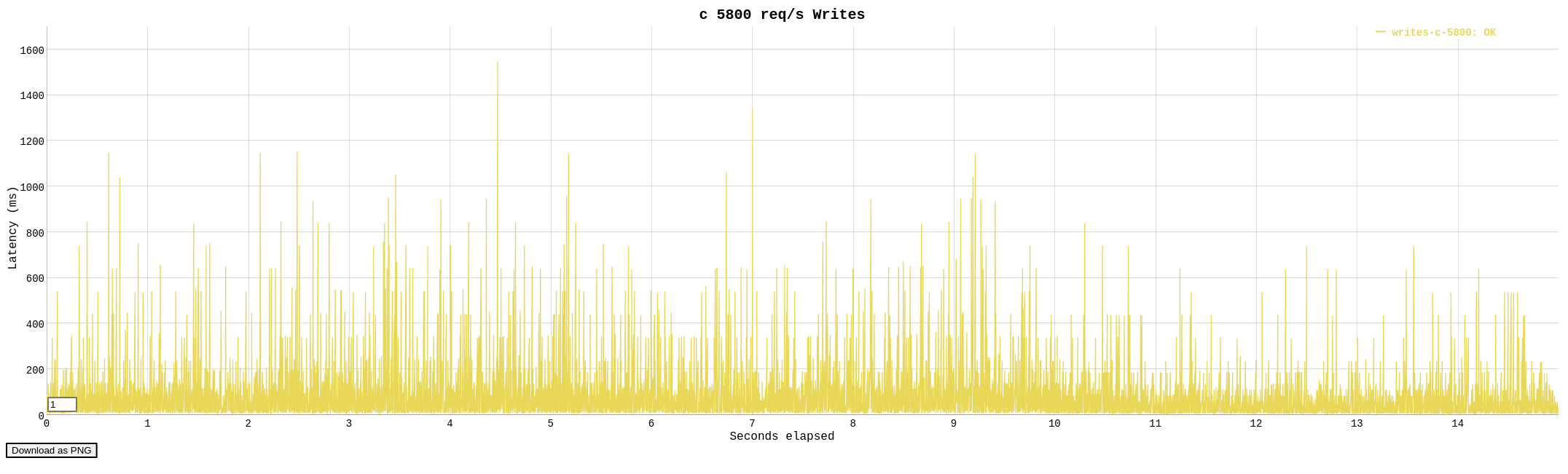

Benchmarking C guestbook-c.cgi

C performance is very similar to Rust, just slightly better, which is the natural order of things.

Requests [total, rate, throughput] 87000, 5799.88, 5750.31

Duration [total, attack, wait] 15.13s, 15s, 129.309ms

Latencies [min, mean, 50, 90, 95, 99, max] 3.741ms, 26.052ms, 14.375ms, 47.567ms, 84.977ms, 196.932ms, 1.547s

Bytes In [total, mean] 946125000, 10875.00

Bytes Out [total, mean] 4524000, 52.00

Success [ratio] 100.00%

Status Codes [code:count] 200:87000

Error Set:

My takeaways

It’s clear that CGI is fast enough with compiled languages that it can be used for real work, even if it’s almost never going to be the highest performance option.
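To put the rates in the same terms as the previous post's title, they can be converted to requests per day. This is only arithmetic on the rounded rates quoted above. It assumes the peak rate from a 15-second run could be held for a full 24 hours, which these benchmarks do not demonstrate.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Approximate peak rates (requests per second) quoted in the sections above.
rates = {
    "Bash": 40,
    "Perl": 500,
    "JavaScript": 600,
    "Python": 700,
    "Go": 3400,
    "Rust": 5700,
    "C": 5800,
}


def requests_per_day(rate):
    """Requests served in 24 hours at a constant rate (requests per second)."""
    return rate * SECONDS_PER_DAY


for language, rate in rates.items():
    print(f"{language:<10} {requests_per_day(rate):>13,} requests/day")
```

On these assumptions, Go comes out at roughly 294 million requests per day and C at roughly 501 million, while Bash stays under 3.5 million.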

It was also very fun to see the relative performance of the different languages play out in the now uncommon environment of CGI.

I love elegant, simple, and powerful technologies like CGI!

Links

- Find me @jacob.gold on Bluesky